When using a TCP/IP VPN tunnel created with PPP over an SSH session, it is important to know the effective data throughput the tunnel can deliver. I will take a stepwise approach to explain how I determined these rates using NST probe systems, various networking equipment, and software benchmarking tools. Where practical, I followed the methods described in RFC 2544, "Benchmarking Methodology for Network Interconnect Devices".

To get started, some background information is appropriate. Communication between computers using TCP/IP takes place through the exchange of packets. A packet is a PDU (Protocol Data Unit) at the IP layer. The PDU at the TCP layer is called a segment, while the PDU at the data-link layer (such as Ethernet) is called a frame. However, the term packet is used generically to describe the data unit exchanged between TCP/IP layers as well as between two computers.

First, one needs to know the maximum performance of the network environment to establish a baseline value. I will be using a switched Fast Ethernet (IEEE 802.3u) network configuration. I chose this configuration because it is a common network topology used in today's enterprise internetworking environments.

Frames per second (FPS) or packets per second (PPS) is a common measure of the throughput performance of a network device. Understanding how to calculate frames per second provides insight into how Ethernet functions and can assist you in network design.

In this section we will calculate the theoretical maximum frames per second of a Fast Ethernet (100Mb/sec) segment. The calculation is considered theoretical because it assumes a network segment without collisions and a fixed frame length. Today's network switches allow us to achieve these theoretical limits by providing Full Duplex capability. In practice, however, the Ethernet data size depends on the upper layer application, so it is very unlikely to find a stream of packets all of exactly the same size on an Ethernet network.

Figure 6.7, “VPN: PPP tunneled over SSH: Fast Ethernet Maximum Throughput Rates” shows the components that make up a Fast Ethernet frame carrying a TCP/IP datagram. The minimum frame payload is 46 Bytes (dictated by the slot time of the Ethernet LAN architecture). The maximum frame payload is 1500 Bytes. The maximum frame rate is achieved by a single transmitting node, which therefore does not suffer any collisions. For the minimum payload this implies a frame of 72 Bytes (8-byte preamble and start-of-frame delimiter, 14-byte header, 46-byte payload, and 4-byte FCS) followed by a 960 nanosecond inter-frame gap (equivalent to 12 Bytes at 100Mb/sec).

**Note:** Protocol analyzers and network monitoring tools typically capture neither the 8-byte preamble/start-of-frame delimiter nor the Ethernet "Frame Check Sequence" (FCS), a Cyclic Redundancy Check (CRC) value. The minimum frame length is therefore usually reported as 60 Bytes and the maximum length as 1514 Bytes (using the normal Ethernet Maximum Transmission Unit (MTU) of 1500 Bytes, RFC 894).

The calculation for the maximum framing rate of Fast Ethernet is also shown in Figure 6.7, “VPN: PPP tunneled over SSH: Fast Ethernet Maximum Throughput Rates”. For the minimum TCP/IP payload frame the maximum theoretical rate is 148,810 FPS. For the maximum TCP/IP payload frame the maximum theoretical rate is 8,127 FPS.
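The framing arithmetic can be reproduced with a short Python sketch. It assumes the standard IEEE 802.3 field sizes (8-byte preamble plus start-of-frame delimiter, 14-byte MAC header, 4-byte FCS) and a 12-byte (96-bit) inter-frame gap; the helper names `wire_bytes` and `max_fps` are hypothetical, chosen only for illustration.

```python
# Sketch: theoretical maximum Fast Ethernet frame rates (no collisions, back-to-back frames).
# Field sizes are the standard IEEE 802.3 values; helper names are illustrative only.

PREAMBLE_SFD = 8   # 7-byte preamble + 1-byte start-of-frame delimiter
MAC_HEADER = 14    # destination MAC, source MAC, EtherType
FCS = 4            # frame check sequence (CRC-32)
IFG = 12           # inter-frame gap: 96 bit times = 12 bytes
FAST_ETHERNET = 100_000_000  # bits per second

def wire_bytes(payload: int) -> int:
    """Bytes one frame occupies on the wire, including the inter-frame gap."""
    return PREAMBLE_SFD + MAC_HEADER + payload + FCS + IFG

def max_fps(bits_per_sec: int, payload: int) -> float:
    """Frames per second when identical frames are sent back to back."""
    return bits_per_sec / (wire_bytes(payload) * 8)

for payload in (46, 1500):
    frame = wire_bytes(payload) - IFG       # 72 or 1526 bytes
    captured = MAC_HEADER + payload         # length an analyzer reports: 60 or 1514
    print(f"payload={payload:4d}  frame={frame:4d}  captured={captured:4d}  "
          f"fps={max_fps(FAST_ETHERNET, payload):,.0f}")
```

The minimum frame occupies 84 Bytes of wire time and the maximum frame 1538 Bytes, which is where the 148,810 and 8,127 FPS figures come from.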

The inter-frame gap is fixed at 96 bit times for 10, 100, and 1000 Mb/sec Ethernet. A bit time is the amount of time required to send one data bit on the network.

Although not shown in the figure, the inter-frame gap times for Ethernet (10Mb/sec, IEEE 802.3) and Gigabit Ethernet (1000Mb/sec, IEEE 802.3z) are 9.6 microseconds and 96 nanoseconds respectively. The maximum theoretical Ethernet framing rate for the minimum TCP/IP payload frame is 14,881 FPS. The maximum theoretical Gigabit Ethernet framing rate for the minimum TCP/IP payload frame is 1,488,095 FPS.
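Because the inter-frame gap is fixed at 96 bit times, both its duration and the minimum-frame rate scale with the line rate. A minimal sketch (hypothetical helper names; 84 wire Bytes per minimum frame, i.e. the 72-byte frame plus the 12-byte gap) that checks the 10, 100, and 1000 Mb/sec figures:

```python
# Sketch: inter-frame gap duration and minimum-frame rate at each Ethernet speed.
IFG_BITS = 96          # inter-frame gap in bit times
MIN_FRAME_WIRE = 84    # 72-byte minimum frame + 12-byte inter-frame gap

def ifg_ns(bits_per_sec: float) -> float:
    """Duration of the inter-frame gap in nanoseconds."""
    return IFG_BITS / bits_per_sec * 1e9

def min_frame_fps(bits_per_sec: float) -> float:
    """Maximum frames per second for minimum-size (46-byte payload) frames."""
    return bits_per_sec / (MIN_FRAME_WIRE * 8)

for name, rate in (("Ethernet", 10e6), ("Fast Ethernet", 100e6), ("Gigabit Ethernet", 1e9)):
    print(f"{name:17s} IFG={ifg_ns(rate):7.1f} ns  max={min_frame_fps(rate):12,.0f} FPS")
```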

Let's measure the actual data transmission rate on a LAN segment between two NST probe systems. The network topology for determining the rate can be found in Figure 6.8, “VPN: PPP tunneled over SSH: Effective data rate: NST Probe - NST Probe same LAN segment”.

The ttcp measurement tool was chosen to determine the TCP/IP transfer rate between Probe: A and Probe: B. A fair amount of data was transferred (i.e., 4096 buffers of 8192 bytes each, for a total of 33,554,432 bytes).

Actual results from the ttcp tool on Probe: A (10.222.222.84). 33 MBytes of text-based data was transferred in 2.85 seconds.

TTCP Results: Transfer Data From: 10.222.222.84


[root@probeA tmp]# /usr/bin/ttcp -t -s -n 4096 10.222.222.87

ttcp-t: socket

ttcp-t: connect

ttcp-t: buflen=8192, nbuf=4096, align=16384/0, port=5001 tcp -> 10.222.222.87

ttcp-t: 33554432 bytes in 2.85 real seconds = 11486.27 KB/sec +++

ttcp-t: 4096 I/O calls, msec/call = 0.71, calls/sec = 1438.24

ttcp-t: 0.0user 0.0sys 0:02real 1% 0i+0d 0maxrss 1+2pf 0+0csw

Actual results from the ttcp tool on Probe: B (10.222.222.87).

TTCP Results: Receive Data On: 10.222.222.87


[root@probeB tmp]# /usr/bin/ttcp -r -s

ttcp-r: socket

ttcp-r: accept from 10.222.222.84

ttcp-r: buflen=8192, nbuf=4096, align=16384/0, port=5001 tcp

ttcp-r: 33554432 bytes in 2.85 real seconds = 11486.27 KB/sec +++

ttcp-r: 23172 I/O calls, msec/call = 0.13, calls/sec = 8122.55

ttcp-r: 0.0user 0.3sys 0:02real 11% 0i+0d 0maxrss 0+1pf 0+0csw

By default, ttcp reports its data rate in KB/sec, where 1 KB = 1024 Bytes. The actual data rate reported by ttcp on Probe: A is therefore 11486.27 KB/sec * 1024 = 11,761,940 Bytes/sec. Compare this value to the theoretical maximum rate for Fast Ethernet with a TCP/IP payload of 1448 bytes (TCP/IP timestamp option enabled): 11,767,896 Bytes/sec, as shown in Figure 6.7, “VPN: PPP tunneled over SSH: Fast Ethernet Maximum Throughput Rates”. For all practical purposes, these typical NST probes can transfer data at Fast Ethernet wire speed.
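The unit conversion and the comparison can be checked with a few lines of Python. As in the text, the sketch assumes ttcp's KB is 1024 Bytes and takes the theoretical figure as the 8,127 maximum-size frames per second from Figure 6.7 multiplied by a 1448-byte TCP payload.

```python
# Sketch: convert ttcp's KB/sec (1 KB = 1024 bytes) to Bytes/sec and compare with the
# theoretical Fast Ethernet goodput for 1448-byte TCP payloads (timestamps enabled).
TTCP_KB = 1024
MAX_FPS = 8127            # maximum-size frames per second at 100 Mb/sec (Figure 6.7)
PAYLOAD_WITH_TS = 1448    # TCP payload per frame with the timestamp option

measured = 11486.27 * TTCP_KB            # Probe: A, timestamps enabled
theoretical = MAX_FPS * PAYLOAD_WITH_TS

print(f"measured    = {measured:,.0f} Bytes/sec")
print(f"theoretical = {theoretical:,} Bytes/sec")
print(f"efficiency  = {measured / theoretical:.2%}")
```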

By default, Linux has the TCP/IP timestamp option ("RFC 1323: TCP Extensions for High Performance") turned on, to improve performance over paths with a large bandwidth*delay product and to provide reliable operation over very high-speed paths. The option adds 12 bytes of TCP header information to each TCP/IP packet. By turning it off, more data can be carried in each TCP/IP payload. One can turn off this option for all subsequent TCP/IP traffic by running the following command as root:

[root@probeA tmp]# echo 0 > /proc/sys/net/ipv4/tcp_timestamps

In my experience, disabling this option for local and broadband Internet connectivity has not caused any network performance degradation. The "-nt" or "--no-tcpip-timestamp" command line option can be used with the vpn-pppssh script to disable TCP/IP timestamping.
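The 1460 and 1448-byte payload figures follow from subtracting the IPv4 and TCP headers from the 1500-byte MTU. The sketch below assumes 20-byte IPv4 and TCP base headers with no other options; the 12 bytes for timestamps are the 10-byte timestamp option plus two padding bytes that keep the TCP header 32-bit aligned.

```python
# Sketch: TCP payload per 1500-byte Ethernet MTU, with and without RFC 1323 timestamps.
MTU = 1500
IPV4_HEADER = 20        # no IP options assumed
TCP_HEADER = 20         # base TCP header, no options
TIMESTAMP_OPTION = 12   # 10-byte timestamp option + 2 padding (NOP) bytes
MAX_FPS = 8127          # maximum-size frames per second at 100 Mb/sec

def tcp_payload(timestamps: bool) -> int:
    """Bytes of application data carried in each full-size segment."""
    overhead = IPV4_HEADER + TCP_HEADER + (TIMESTAMP_OPTION if timestamps else 0)
    return MTU - overhead

for ts in (True, False):
    payload = tcp_payload(ts)
    print(f"timestamps={ts!s:5s} payload={payload} max goodput={payload * MAX_FPS:,} Bytes/sec")
```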

Next, another ttcp test was run over the same Fast Ethernet LAN segment, this time with the TCP/IP timestamp option disabled on both NST Probe: A and Probe: B. The results follow:

Actual results from the ttcp tool on Probe: A (10.222.222.84) - no TCP/IP timestamps. 33 MBytes of text-based data was transferred in 2.83 seconds.

TTCP Results: Transfer Data From: 10.222.222.84 TCP/IP Timestamps Disabled


[root@probeA tmp]# /usr/bin/ttcp -t -s -n 4096 10.222.222.87

ttcp-t: socket

ttcp-t: connect

ttcp-t: buflen=8192, nbuf=4096, align=16384/0, port=5001 tcp -> 10.222.222.87

ttcp-t: 33554432 bytes in 2.83 real seconds = 11582.06 KB/sec +++

ttcp-t: 4096 I/O calls, msec/call = 0.71, calls/sec = 1451.09

ttcp-t: 0.0user 0.0sys 0:02real 2% 0i+0d 0maxrss 1+2pf 0+0csw

Actual results from the ttcp tool on Probe: B (10.222.222.87) - no TCP/IP timestamps.

TTCP Results: Receive Data On: 10.222.222.87 TCP/IP Timestamps Disabled


[root@probeB tmp]# /usr/bin/ttcp -r -s

ttcp-r: socket

ttcp-r: accept from 10.222.222.84

ttcp-r: buflen=8192, nbuf=4096, align=16384/0, port=5001 tcp

ttcp-r: 33554432 bytes in 2.83 real seconds = 11582.06 KB/sec +++

ttcp-r: 22941 I/O calls, msec/call = 0.13, calls/sec = 8108.64

ttcp-r: 0.0user 0.3sys 0:02real 13% 0i+0d 0maxrss 0+2pf 0+0csw

The results for this test with the TCP/IP timestamp option disabled are as follows. The actual data rate reported by ttcp on Probe: A is 11582.06 KB/sec * 1024 = 11,860,029 Bytes/sec. Compare this value to the theoretical maximum rate for Fast Ethernet with a TCP/IP payload of 1460 bytes (no TCP/IP timestamp): 11,865,420 Bytes/sec, as shown in Figure 6.7, “VPN: PPP tunneled over SSH: Fast Ethernet Maximum Throughput Rates”. Once again the transfer rate between these NST probes is practically Fast Ethernet wire speed.

With the TCP/IP timestamp option disabled, the measured rate increased by 98,089 Bytes/sec (11,860,029 - 11,761,940), which agrees closely with the theoretical gain of 97,524 Bytes/sec (11,865,420 - 11,767,896).
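Comparing measured against measured and theoretical against theoretical gives the gain from disabling timestamps. A short check using the two ttcp results above and the 8,127 FPS maximum (12 extra payload bytes per full-size frame):

```python
# Sketch: throughput gained by disabling TCP timestamps on the same LAN segment.
TTCP_KB = 1024
MAX_FPS = 8127

measured_ts = 11486.27 * TTCP_KB        # timestamps enabled (Probe: A)
measured_no_ts = 11582.06 * TTCP_KB     # timestamps disabled (Probe: A)
theoretical_gain = MAX_FPS * (1460 - 1448)

print(f"measured gain    = {measured_no_ts - measured_ts:,.0f} Bytes/sec")
print(f"theoretical gain = {theoretical_gain:,} Bytes/sec")
```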

A second method was also used to determine the actual TCP/IP transfer rate between the probe systems. Cisco switches offer a network monitoring feature called the Switched Port Analyzer (SPAN). As Figure 6.8, “VPN: PPP tunneled over SSH: Effective data rate: NST Probe - NST Probe same LAN segment” shows, all network traffic on Fast Ethernet port: 8 is being monitored by Fast Ethernet port: 21 using SPAN.

A high-end Red Hat Linux system (2 x Intel Xeon 2.8GHz) was used to monitor the SPAN traffic with Wireshark (then named Ethereal, Version: 0.10.0). The summary view for the captured TCP/IP traffic is shown in Figure 6.9, “VPN: PPP tunneled over SSH: Ethereal capture summary view”. No packet loss was detected in the capture at the maximum Fast Ethernet transfer rate with this equipment. This capture was taken at the same time as the maximum TCP/IP transfer rate test with the TCP/IP timestamp option disabled.

From this summary one can see the high utilization of the Fast Ethernet bandwidth. A Wireshark display filter was used to focus on data packets associated only with the NST probe systems. In the "Data in filtered packets" section, the "Avg. Mbit/sec" value is 97.686. Ethereal does not count the 4-byte "Frame Check Sequence" (CRC, Cyclic Redundancy Check). Adding the CRC (4 Bytes per packet) and the equivalent of the Inter-Frame Gap (12 Bytes per packet) to the filtered average of 12,210,700 Bytes/sec at 11,869 packets/sec gives a new average of: ((12,210,700 + (4 + 12) * 11,869) / 12,500,000) * 100 Mbit/sec = 99.20 Mbit/sec, where 12,500,000 Bytes/sec is the 100 Mbit/sec line rate.
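The wire-rate estimate can be reproduced as follows. The sketch assumes 12,210,700 is the filtered average in Bytes/sec and 11,869 the filtered average in packets/sec from the capture summary. Since analyzers also do not capture the 8-byte preamble and start-of-frame delimiter, it shows the estimate both without and with that per-frame overhead.

```python
# Sketch: estimate the Fast Ethernet wire rate from capture-summary averages by adding
# back per-frame bytes that the analyzer does not capture.
captured_bytes_per_sec = 12_210_700   # filtered average from the summary (~97.686 Mbit/sec)
packets_per_sec = 11_869              # filtered average packets per second (assumed meaning)

FCS = 4           # frame check sequence, not captured
IFG = 12          # inter-frame gap equivalent (96 bit times)
PREAMBLE_SFD = 8  # preamble + start-of-frame delimiter, also not captured

def wire_mbit(per_frame_overhead: int) -> float:
    """Mbit/sec on the wire once uncaptured per-frame bytes are added back."""
    on_wire = captured_bytes_per_sec + per_frame_overhead * packets_per_sec
    return on_wire * 8 / 1_000_000

print(f"FCS + IFG            : {wire_mbit(FCS + IFG):.2f} Mbit/sec")
print(f"FCS + IFG + preamble : {wire_mbit(FCS + IFG + PREAMBLE_SFD):.2f} Mbit/sec")
```

Counting the preamble as well brings the estimate to roughly 99.96 Mbit/sec, still just under the 100 Mbit/sec line rate.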

Next we will measure the actual effective data transmission rate between two NST probe systems across a network segment boundary. Two VLANs were created within a Layer 3 (L3) Gigabit managed switch: (S2) (D-Link DGS-3308TG). The network topology for this benchmarking measurement can be found in Figure 6.10, “VPN: PPP tunneled over SSH: Throughput Rate: NST Probe - NST Probe Different LAN Segments (2 VLANs)”. As the results below show, packets transmitted by Probe: A, switched through Layer 2 (L2) switch: (S1), routed through L3 switch: (S2), switched through L2 switch: (S3), and received by Probe: B approach the theoretical Fast Ethernet wire speed.

This result is important because, when we benchmark the throughput rate across a network segment using a PPP over SSH VPN, we need to know that the networking infrastructure is not introducing hidden bottlenecks into the measurement.

Figure 6.10. VPN: PPP tunneled over SSH: Throughput Rate: NST Probe - NST Probe Different LAN Segments (2 VLANs)

Actual results from the ttcp tool on Probe: A (10.222.222.86) - traversing a network segment boundary with no TCP/IP timestamps. 67 MBytes of text-based data was transferred in 5.70 seconds.

TTCP Results: Transmit Data From: 10.222.222.86 TCP/IP Timestamps Disabled


[root@probeA tmp]# ttcp -t -s -n 8192 10.222.221.101

ttcp-t: socket

ttcp-t: connect

ttcp-t: buflen=8192, nbuf=8192, align=16384/0, port=5001 tcp -> 10.222.221.101

ttcp-t: 67108864 bytes in 5.70 real seconds = 11505.45 KB/sec +++

ttcp-t: 8192 I/O calls, msec/call = 0.71, calls/sec = 1438.18

ttcp-t: 0.0user 0.1sys 0:05real 1% 0i+0d 0maxrss 1+2pf 0+0csw

Actual results from the ttcp tool on Probe: B (10.222.221.101) - traversing a network segment boundary with no TCP/IP timestamps.

TTCP Results: Receive Data On: 10.222.221.101 TCP/IP Timestamps Disabled


[root@probeB tmp]# /usr/bin/ttcp -r -s

ttcp-r: socket

ttcp-r: accept from 10.222.222.86

ttcp-r: buflen=8192, nbuf=8192, align=16384/0, port=5001 tcp

ttcp-r: 67108864 bytes in 5.70 real seconds = 11505.45 KB/sec +++

ttcp-r: 46360 I/O calls, msec/call = 0.13, calls/sec = 8128.03

ttcp-r: 0.0user 0.6sys 0:05real 13% 0i+0d 0maxrss 0+1pf 0+0csw

Remember that ttcp reports its data rate by default in KB/sec, where 1 KB = 1024 Bytes; the actual data rate for this benchmarking measurement was therefore 11505.45 KB/sec * 1024 = 11,781,581 Bytes/sec.
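To see how close the routed path comes to the same-segment result, a small sketch compares the cross-VLAN rate with the theoretical 1460-byte-payload maximum and with the earlier same-segment, no-timestamp run (10.222.222.84 to 10.222.222.87). Note that the two runs used different Probe: A hosts, so the comparison is indicative only.

```python
# Sketch: compare the cross-VLAN ttcp rate with the theoretical maximum and with the
# same-segment run (TCP timestamps disabled in both cases, 1460-byte payloads).
TTCP_KB = 1024
THEORETICAL = 8127 * 1460            # 11,865,420 Bytes/sec

same_segment = 11582.06 * TTCP_KB    # 10.222.222.84 -> 10.222.222.87, one LAN segment
cross_vlan = 11505.45 * TTCP_KB      # 10.222.222.86 -> 10.222.221.101, routed via S2

print(f"cross-VLAN rate : {cross_vlan:,.0f} Bytes/sec")
print(f"vs theoretical  : {cross_vlan / THEORETICAL:.2%}")
print(f"vs same segment : {cross_vlan / same_segment:.2%}")
```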

Finally, we will measure the actual effective data transmission rate using a PPP tunneled over SSH VPN between two NST probe systems across a network segment boundary. Once again, two VLANs were created within a Layer 3 (L3) Gigabit managed switch: (S2) (D-Link DGS-3308TG). The network topology for this benchmarking measurement can be found in Figure 6.11, “VPN: PPP tunneled over SSH: Effective data Rate: NST Probe - NST Probe different LAN segments (2 VLANs) over the VPN”.

Different data types will be transmitted over the VPN so that the results reflect typical real-world data traffic. We will benchmark text-based transfers, compressed file transfers (bzip2 format), and SMB file system transfers.

Packets will be transmitted by Probe: A through the VPN and eventually received by Probe: B.

First we need to set up the VPN. Red Hat Linux host: 10.222.222.14 on VLAN: 222 and NST Probe: C (10.222.221.100) on VLAN: 221 will form the endpoints of the VPN. The following parameters to the "vpn-pppssh" script are used to set up the VPN on Red Hat Linux host: 10.222.222.14:

[root@redhat tmp]# vpn-pppssh -r 10.222.221.100 -s 10.222.222.31 -c 10.222.222.32 -rt -sn 10.222.221.0/24 -cn 10.222.222.0/24 -v -nt

Figure 6.11. VPN: PPP tunneled over SSH: Effective data Rate: NST Probe - NST Probe different LAN segments (2 VLANs) over the VPN

**Note:** The network route to VLAN: 221 for Red Hat Linux host: 10.222.222.14 is through "10.222.222.4". The network route to VLAN: 221 for Probe: A is through "10.222.222.14". The network route to VLAN: 222 for NST Probe: C is through "10.222.221.1". Lastly, the network route to VLAN: 222 for Probe: B is through "10.222.221.100".
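A static next hop only works if it is directly reachable on the host's own subnet. The sketch below transcribes the routes from the Note into a hypothetical table and checks that property with Python's `ipaddress` module; the /24 masks are an assumption based on the `-sn 10.222.221.0/24` and `-cn 10.222.222.0/24` arguments above.

```python
# Sketch: confirm each next hop from the Note is on the host's own subnet.
# Route table transcribed from the Note; /24 masks are assumed.
import ipaddress

ROUTES = [
    # (host interface,     destination network, next hop)
    ("10.222.222.14/24",  "10.222.221.0/24", "10.222.222.4"),    # Red Hat VPN endpoint
    ("10.222.222.86/24",  "10.222.221.0/24", "10.222.222.14"),   # Probe: A
    ("10.222.221.100/24", "10.222.222.0/24", "10.222.221.1"),    # NST Probe: C
    ("10.222.221.101/24", "10.222.222.0/24", "10.222.221.100"),  # Probe: B
]

def next_hop_on_link(host: str, gateway: str) -> bool:
    """True if the gateway lies inside the host's directly connected subnet."""
    return ipaddress.ip_address(gateway) in ipaddress.ip_interface(host).network

for host, dest, gw in ROUTES:
    print(f"{host:18s} -> {dest:16s} via {gw:15s} on-link={next_hop_on_link(host, gw)}")
```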

Now that the VPN has been established, we will examine the results of sending text-based data over the VPN between two NST probe systems using ttcp.

Actual results from the ttcp tool on Probe: A (10.222.222.86) - traversing a network segment boundary with no TCP/IP timestamps. 67 MBytes of text-based data was transferred in 5.87 seconds.

TTCP (TEXT DATA) Results: Transmit Data From: 10.222.222.86 TCP/IP Timestamps Disabled


[root@probeA tmp]# ttcp -t -s -n 8192 10.222.221.101

ttcp-t: socket

ttcp-t: connect

ttcp-t: buflen=8192, nbuf=8192, align=16384/0, port=5001 tcp -> 10.222.221.101

ttcp-t: 67108864 bytes in 5.87 real seconds = 11160.41 KB/sec +++

ttcp-t: 8192 I/O calls, msec/call = 0.73, calls/sec = 1395.05

ttcp-t: 0.0user 0.1sys 0:05real 2% 0i+0d 0maxrss 1+2pf 0+0csw

Actual results from the ttcp tool on Probe: B (10.222.221.101) - traversing a network segment boundary with no TCP/IP timestamps.

TTCP (TEXT DATA) Results: Receive Data On: 10.222.221.101 TCP/IP Timestamps Disabled


[root@probeB tmp]# /usr/bin/ttcp -r -s

ttcp-r: socket

ttcp-r: accept from 10.222.222.86

ttcp-r: buflen=8192, nbuf=8192, align=16384/0, port=5001 tcp

ttcp-r: 67108864 bytes in 5.87 real seconds = 11160.41 KB/sec +++

ttcp-r: 46392 I/O calls, msec/call = 0.13, calls/sec = 7891.94

ttcp-r: 0.0user 0.6sys 0:05real 12% 0i+0d 0maxrss 0+2pf 0+0csw

From the ttcp results, one can see that a high effective data rate can be achieved when sending text-based data through the VPN. The pppd daemon uses a "deflate" compression scheme, which is very effective with text-based data. This compression scheme is enabled by default when the pppd daemon is started by the "vpn-pppssh" script.
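PPP Deflate compression (RFC 1979) is based on the same DEFLATE algorithm that zlib implements, so Python's `zlib` module gives a rough feel for why repetitive text compresses well while already-compressed data does not. The sample data is an assumption: a repeating printable-ASCII pattern similar in spirit to ttcp's `-s` source data, and random bytes standing in for a bzip2 file. Real ratios on the PPP link also depend on pppd's compression window and per-packet framing.

```python
# Sketch: DEFLATE on repetitive text vs. incompressible (random) data.
# zlib implements DEFLATE, the algorithm behind PPP Deflate compression (RFC 1979).
import os
import zlib

SIZE = 1_000_000

# Repeating printable ASCII (0x20-0x7E), similar in spirit to ttcp -s source data.
printable = bytes(range(0x20, 0x7F))
text_like = (printable * (SIZE // len(printable) + 1))[:SIZE]

# Random bytes approximate already-compressed input such as a bzip2 file.
random_like = os.urandom(SIZE)

for name, data in (("text-like", text_like), ("random", random_like)):
    compressed = zlib.compress(data, 6)
    print(f"{name:10s} {len(data):,} -> {len(compressed):,} bytes "
          f"(ratio {len(data) / len(compressed):.2f}:1)")
```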

The next benchmark test sends a 6 MByte "bzip2" compressed file over the VPN from Probe: A (10.222.222.86). This transfer took 1.06 seconds.

TTCP (COMPRESSED FILE) Results: Transmit Data From: 10.222.222.86 TCP/IP Timestamps Disabled


[root@probeA tmp]# ttcp -t 10.222.221.101 < /tmp/xxx.bz2

ttcp-t: socket

ttcp-t: connect

ttcp-t: buflen=8192, nbuf=2048, align=16384/0, port=5001 tcp -> 10.222.221.101

ttcp-t: 6066949 bytes in 1.06 real seconds = 5584.98 KB/sec +++

ttcp-t: 741 I/O calls, msec/call = 1.47, calls/sec = 698.50

ttcp-t: 0.0user 0.0sys 0:01real 1% 0i+0d 0maxrss 0+2pf 0+0csw

Actual results from the ttcp tool on Probe: B (10.222.221.101) - traversing a network segment boundary with no TCP/IP timestamps. These results reflect the compressed file being transferred.

TTCP (COMPRESSED FILE) Results: Receive Data On: 10.222.221.101 TCP/IP Timestamps Disabled


[root@probeB tmp]# /usr/bin/ttcp -r > /tmp/xxx.bz2

ttcp-r: socket

ttcp-r: accept from 10.222.222.86

ttcp-r: buflen=8192, nbuf=2048, align=16384/0, port=5001 tcp

ttcp-r: 6066949 bytes in 1.07 real seconds = 5532.37 KB/sec +++

ttcp-r: 4197 I/O calls, msec/call = 0.26, calls/sec = 3919.04

ttcp-r: 0.0user 0.0sys 0:01real 7% 0i+0d 0maxrss 0+1pf 0+0csw

Since the data to be transferred is already compressed, the pppd daemon's "deflate" compression scheme cannot increase the effective data throughput rate. These results can serve as a baseline for the lower end of the effective data throughput rate over the VPN when the data to be transferred is primarily compressed. For this benchmark measurement, the effective throughput rate for transferring compressed data over the VPN is slightly less than one-half of the maximum theoretical Fast Ethernet line speed.
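The "slightly less than one-half" figure can be checked against the theoretical no-timestamp goodput of 11,865,420 Bytes/sec, using both the transmit-side and receive-side ttcp results (1 KB = 1024 Bytes, as before):

```python
# Sketch: compressed-file throughput over the VPN as a fraction of the
# theoretical Fast Ethernet goodput (timestamps disabled, 1460-byte payloads).
TTCP_KB = 1024
THEORETICAL = 8127 * 1460          # 11,865,420 Bytes/sec

sent = 5584.98 * TTCP_KB           # Probe: A (transmit side)
received = 5532.37 * TTCP_KB       # Probe: B (receive side)

for side, rate in (("transmit", sent), ("receive", received)):
    print(f"{side:8s} {rate:,.0f} Bytes/sec = {rate / THEORETICAL:.1%} of theoretical")
```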

Many factors contribute to this effective data throughput rate for transferring compressed data over the VPN: 1) overhead associated with tunnelling the data (discussed above); 2) TCP/IP acknowledgements to both the file transfer sender and the VPN end-point recipient occurring simultaneously on the same physical network interface; and 3) inherent delays associated with routing packets within the Linux OS between different network interfaces (i.e. ppp0 <=> eth0).

Our final benchmarking test sends SMB (Server Message Block) traffic, also referred to as the Common Internet File System (CIFS), LanManager, or NetBIOS file sharing protocol, over the VPN. The network topology for this measurement is shown in Figure 6.12, “VPN: PPP tunneled over SSH: Effective data Rate: NST Probe - NST Probe different LAN segments (2 VLANs) over the VPN for SMB file services”.

Figure 6.12. VPN: PPP tunneled over SSH: Effective data Rate: NST Probe - NST Probe different LAN segments (2 VLANs) over the VPN for SMB file services

The notebook system (NIC0: 10.222.222.18) has been changed back to running the Windows XP Professional OS. We will start up Samba on Probe: B and send files using SMB over the VPN.

The protocol analyzer Wireshark was used on the Red Hat Linux system to capture packets on network interface "eth1". Once again, this interface had no IP address bound to it, so it was operating in "stealth" mode. Network interface "eth1" was connected to SPAN port: 21 on Switch: S1, which was configured to monitor all network traffic activity on Switch: S1 port: 11.

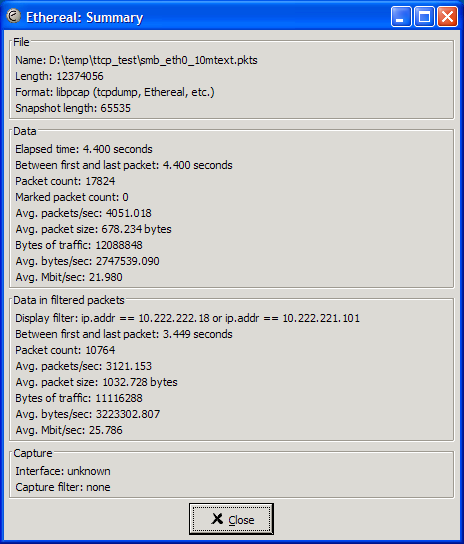

Figure 6.13, “VPN: PPP tunneled over SSH: Effective data Rate: NST Probe - NST Probe different LAN segments (2 VLANs) over the VPN for SMB file services - Ethereal capture summary: 1” summarizes the results of sending a 10 MByte text-based file using SMB file services from Probe: B to the Windows XP Professional system.

Figure 6.13. VPN: PPP tunneled over SSH: Effective data Rate: NST Probe - NST Probe different LAN segments (2 VLANs) over the VPN for SMB file services - Ethereal capture summary: 1

A display filter was set in Wireshark (Version: 0.10.3) to show only traffic to or from hosts 10.222.222.18 (Windows XP) and 10.222.221.101 (Probe: B). The Wireshark display filter syntax was: "ip.addr == 10.222.222.18 or ip.addr == 10.222.221.101". The results for this display filter can be seen in the summary window under "Data in filtered packets". This section shows that it took 3.449 seconds to send the 10 MByte text-based file using SMB over the VPN. The file transfer plus any SMB overhead (a total of 11,116,288 bytes of data) had an effective throughput rate of 3223.30 KB/sec (decimal, 1 KB = 1000 Bytes).
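Unlike ttcp, the capture summary's rate appears to use decimal kilobytes. A quick check with the rounded duration from the summary shows that only the 1000-byte interpretation matches the reported 3223.30 figure:

```python
# Sketch: check the units of the SMB throughput reported in the capture summary.
total_bytes = 11_116_288   # "Data in filtered packets": bytes
duration = 3.449           # seconds (rounded in the summary)

rate = total_bytes / duration
print(f"{rate:,.0f} Bytes/sec")
print(f"{rate / 1000:.2f} decimal KB/sec   {rate / 1024:.2f} KB/sec (1024-byte KB)")
```

The small difference from 3223.30 comes from the summary rounding the elapsed time to 3.449 seconds.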

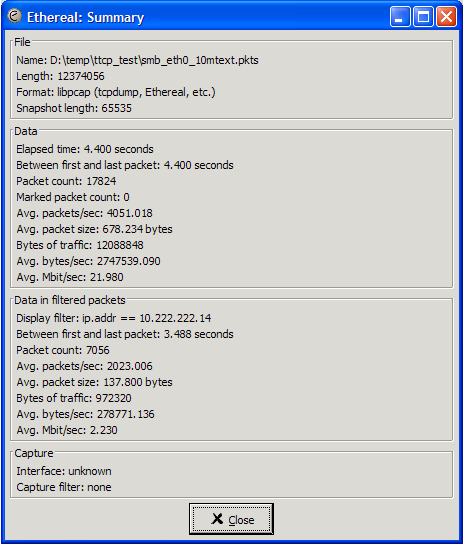

Figure 6.14. VPN: PPP tunneled over SSH: Effective data Rate: NST Probe - NST Probe different LAN segments (2 VLANs) over the VPN for SMB file services - Ethereal capture summary: 2

Figure 6.14, “VPN: PPP tunneled over SSH: Effective data Rate: NST Probe - NST Probe different LAN segments (2 VLANs) over the VPN for SMB file services - Ethereal capture summary: 2” shows the results for the same capture with a different Wireshark display filter that focuses on the VPN traffic only. The Wireshark display filter syntax was: "ip.addr == 10.222.222.14". The results for this display filter can once again be seen in the summary window under "Data in filtered packets". This section shows that it took 3.488 seconds to send the 10 MByte text-based file over the VPN. Only 972,320 bytes of data were sent over the VPN between the two VPN end-points. The text-based data is clearly being compressed by the "pppd" daemon, by a factor of over 11 to 1 (11,116,288 / 972,320 is about 11.4).
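The compression ratio and the rate actually carried by the tunnel follow directly from the two filtered byte counts; a minimal check:

```python
# Sketch: compression achieved on the VPN link for the SMB text-file transfer.
smb_bytes = 11_116_288   # SMB traffic between Windows XP and Probe: B (Figure 6.13)
vpn_bytes = 972_320      # traffic to/from the VPN end-point 10.222.222.14 (Figure 6.14)
vpn_seconds = 3.488

print(f"compression ratio : {smb_bytes / vpn_bytes:.2f} : 1")
print(f"VPN link rate     : {vpn_bytes / vpn_seconds:,.0f} Bytes/sec")
```

The tunnel itself carried well under 300,000 Bytes/sec, a small fraction of the Fast Ethernet capacity, while delivering over 3 MBytes/sec of SMB data.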

In summary, the make-up or entropy of the data being sent through the PPP tunneled over SSH VPN dictates the effective throughput rate. Hopefully the analysis presented here can help you determine whether this type of VPN configuration is appropriate for your environment.